Workflows and Agents

This guide reviews common patterns in agent systems. It may be helpful to distinguish between "workflow" and "agent" when describing these systems. Anthropic provides a good explanation of one way to think about this distinction:

A workflow is a system that orchestrates large language models (LLMs) and tools through predefined code paths. An agent, on the other hand, is a system where the LLM dynamically directs its own process and tool usage, maintaining control over how the task is completed.

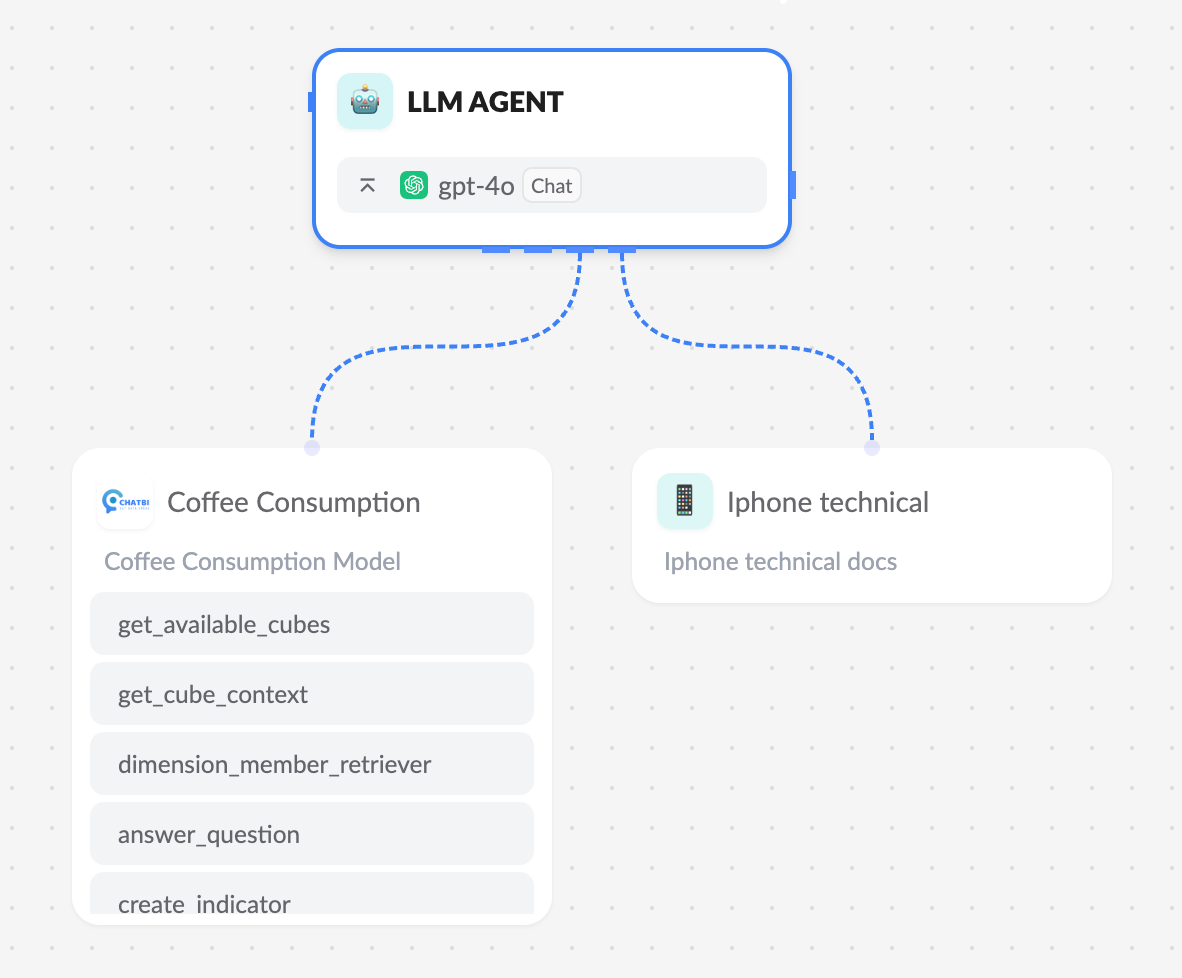

You can use any chat model that supports structured output and tool calls. Below, we show how to implement common agent system patterns on the Xpert AI platform.

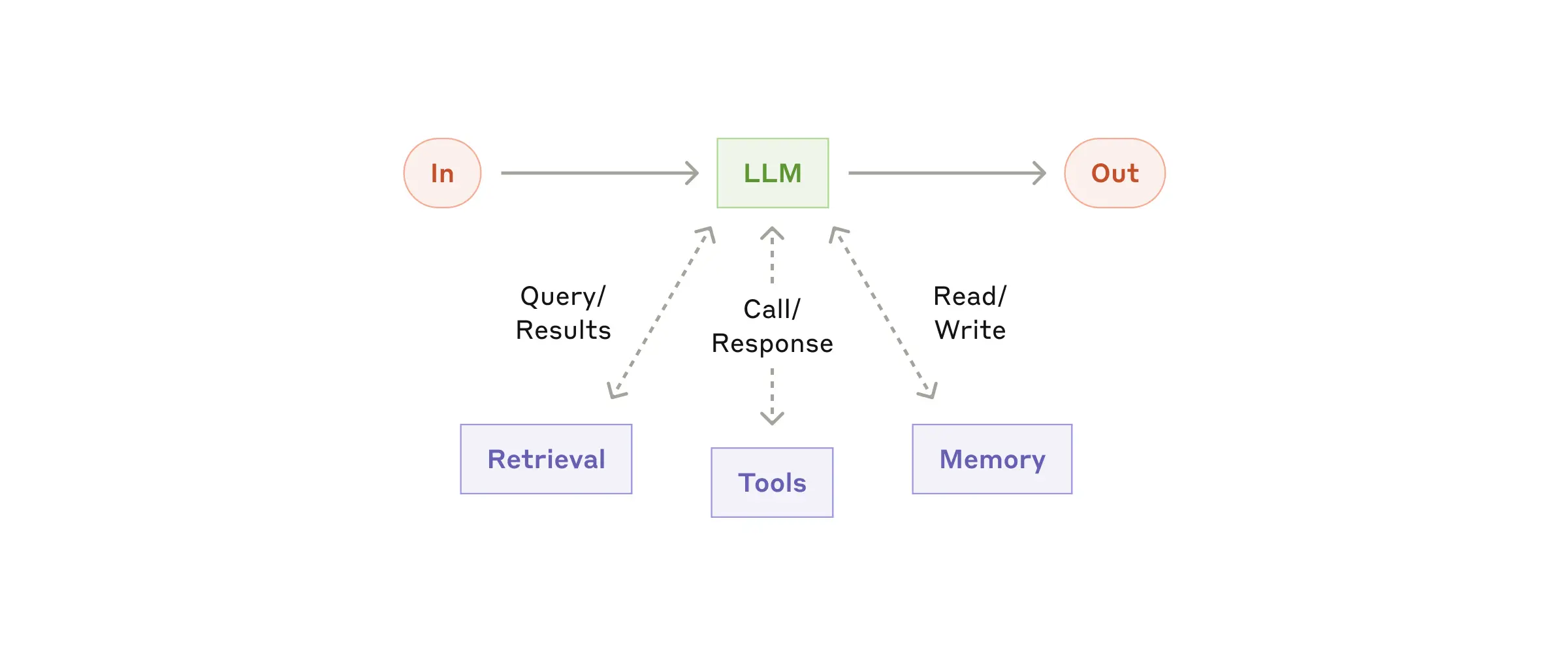

Building Block: Augmented LLM

LLMs can be augmented with capabilities that support building workflows and agents. These include structured output and tool calls, as shown in this image from the Anthropic blog:

Prompt Chaining

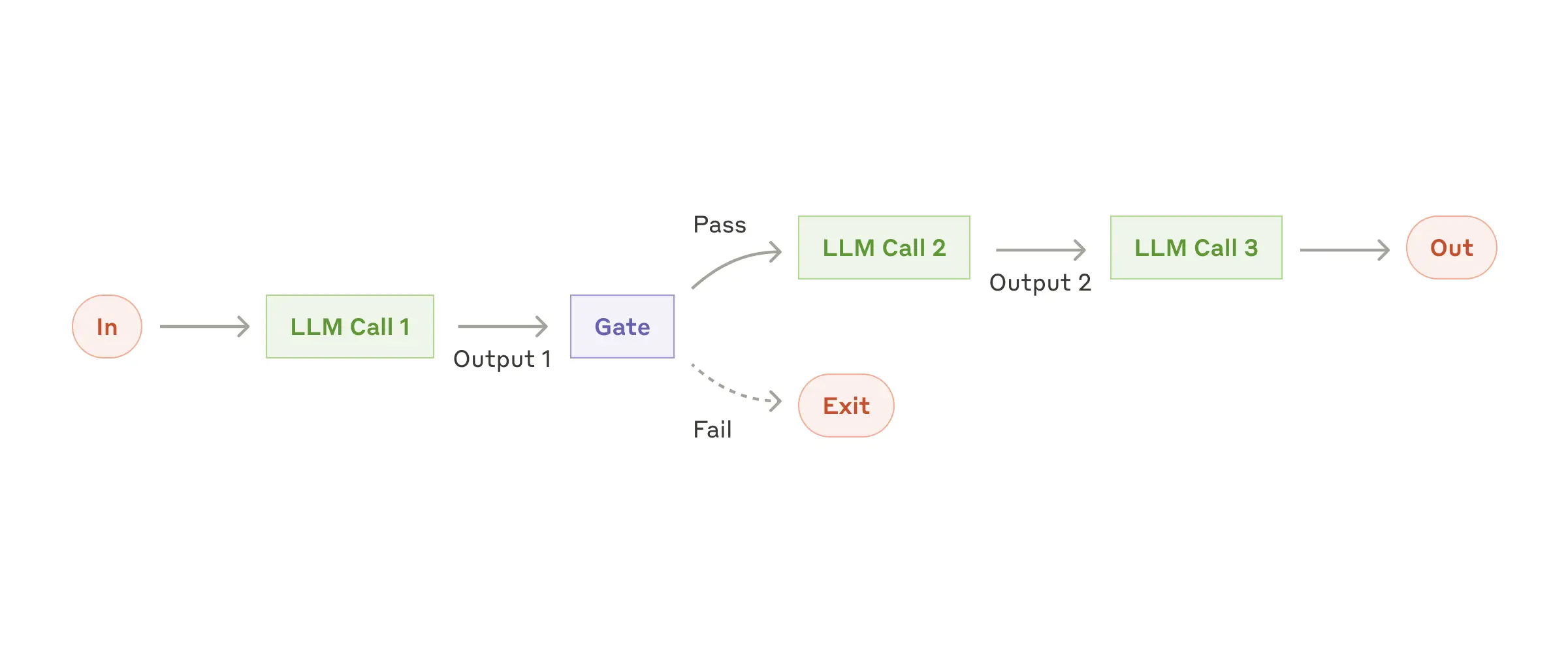

In a prompt chain, each LLM call processes the output from the previous call.

As mentioned in the Anthropic blog:

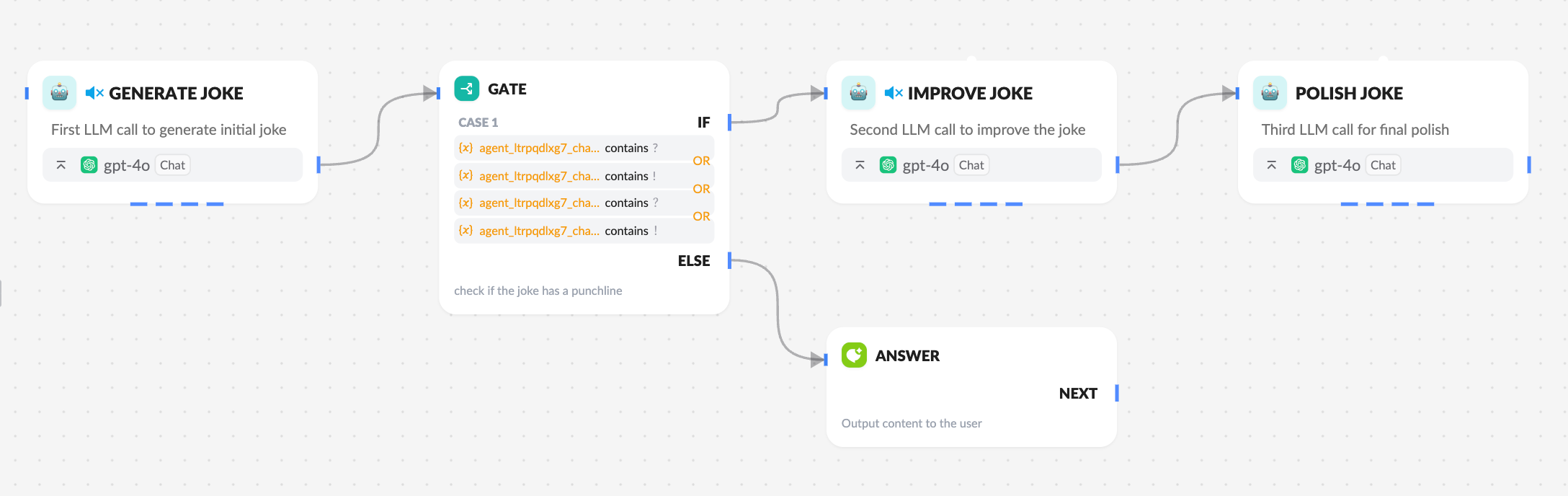

A prompt chain breaks a task into a series of steps, where each LLM call processes the output of the previous one. You can add program checks at any intermediate step (see the "gate" in the image below) to ensure the process stays on track.

When to use this workflow: This workflow is ideal for tasks that can be easily and clearly broken down into fixed sub-tasks. The main goal is to trade slightly higher latency for higher accuracy by making each LLM call an easier task.
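As a platform-neutral illustration, a two-step chain with a programmatic gate might be sketched as follows. The `fake_llm` stub, the prompt prefixes, and the gate rule are all hypothetical; they stand in for real model calls and are not part of the Xpert AI platform.

```python
from typing import Callable

# Hypothetical stand-in type for a chat model call.
LLM = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    """Deterministic stub so the sketch runs without a real model."""
    if prompt.startswith("Outline:"):
        return "1. Intro\n2. Body\n3. Conclusion"
    if prompt.startswith("Draft:"):
        return "A short draft that follows the outline."
    return ""


def outline_gate(outline: str) -> bool:
    """Programmatic check: require at least three numbered items."""
    items = [line for line in outline.splitlines() if line[:1].isdigit()]
    return len(items) >= 3


def run_chain(topic: str, llm: LLM = fake_llm) -> str:
    # Step 1: the first call produces an outline.
    outline = llm(f"Outline: {topic}")
    # Gate: stop early if the intermediate output is off track.
    if not outline_gate(outline):
        return "Chain stopped: outline failed the gate."
    # Step 2: the second call consumes the first call's output.
    return llm(f"Draft: write from this outline\n{outline}")
```

Calling `run_chain("agent patterns")` runs both steps with the stub. Passing an `llm` that returns an empty outline exercises the early exit.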

On the Xpert AI platform, the prompt chain is implemented using agent nodes, routing, and response nodes. If routing carries the chain through to the final agent node, that agent's streamed output is returned to the user. If routing exits the chain early, the response node returns the specified variable or content to the user.

Reference Template: Workflow Prompt Chaining.

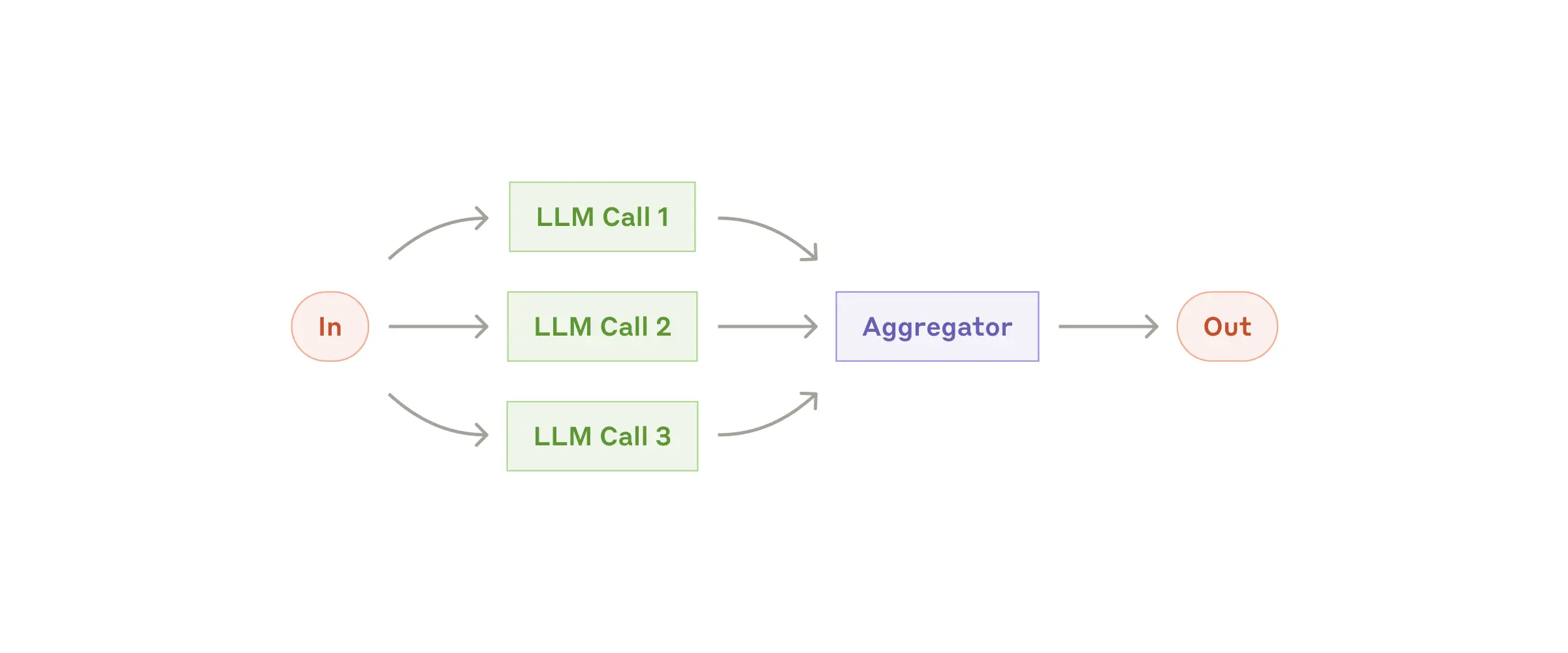

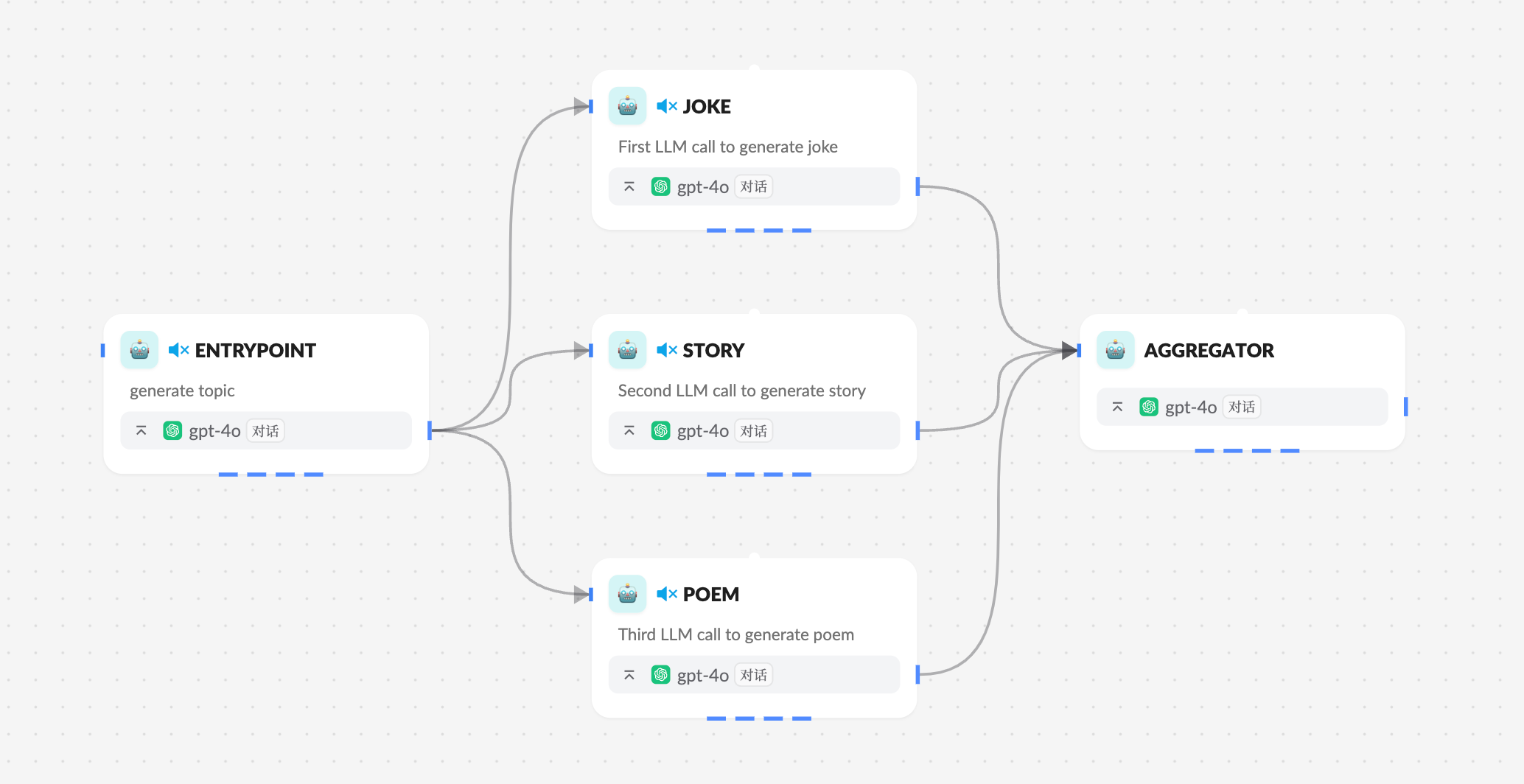

Parallelization

With parallelization, multiple LLM calls work on a task at the same time. As mentioned in the Anthropic blog:

Large language models can sometimes work on a task simultaneously and have their outputs aggregated programmatically. This workflow, called parallelization, comes in two key variants: sectioning (breaking a task into independent sub-tasks that run in parallel) and voting (running the same task multiple times to get diverse outputs).

When to use this workflow: Parallelization is effective when sub-tasks can be processed in parallel to improve speed, or when multiple perspectives or attempts are needed to achieve more confident results. For complex tasks that involve multiple considerations, large language models often perform better when each consideration is handled by a separate model call, allowing for focused attention on each specific aspect.
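The two variants can be sketched with Python's standard thread pool. This is a minimal illustration only: the `worker` and `voters` callables are hypothetical stand-ins for model calls, and the majority-vote aggregation is one possible choice among many.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence


def section(parts: Sequence[str], worker: Callable[[str], str]) -> List[str]:
    """Sectioning: run independent sub-tasks in parallel, keeping input order."""
    with ThreadPoolExecutor(max_workers=4) as pool:
        return list(pool.map(worker, parts))


def vote(prompt: str, voters: Sequence[Callable[[str], str]]) -> str:
    """Voting: run the same task several times and return the most common answer."""
    with ThreadPoolExecutor(max_workers=4) as pool:
        answers = list(pool.map(lambda voter: voter(prompt), voters))
    return Counter(answers).most_common(1)[0][0]


# Hypothetical stubs standing in for model calls.
def shout(text: str) -> str:
    return text.upper()


STUB_VOTERS = [lambda p: "safe", lambda p: "unsafe", lambda p: "safe"]
```

Here `section(["intro", "body"], shout)` processes each part independently, while `vote("Is this code safe?", STUB_VOTERS)` aggregates three attempts at the same question.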

On the Xpert AI platform, you can implement parallelized agent workflows by adding multiple agent nodes directly as subsequent nodes. Iteration nodes, like agent nodes, also support parallel subsequent nodes.

Reference Template: Workflow Parallelization.

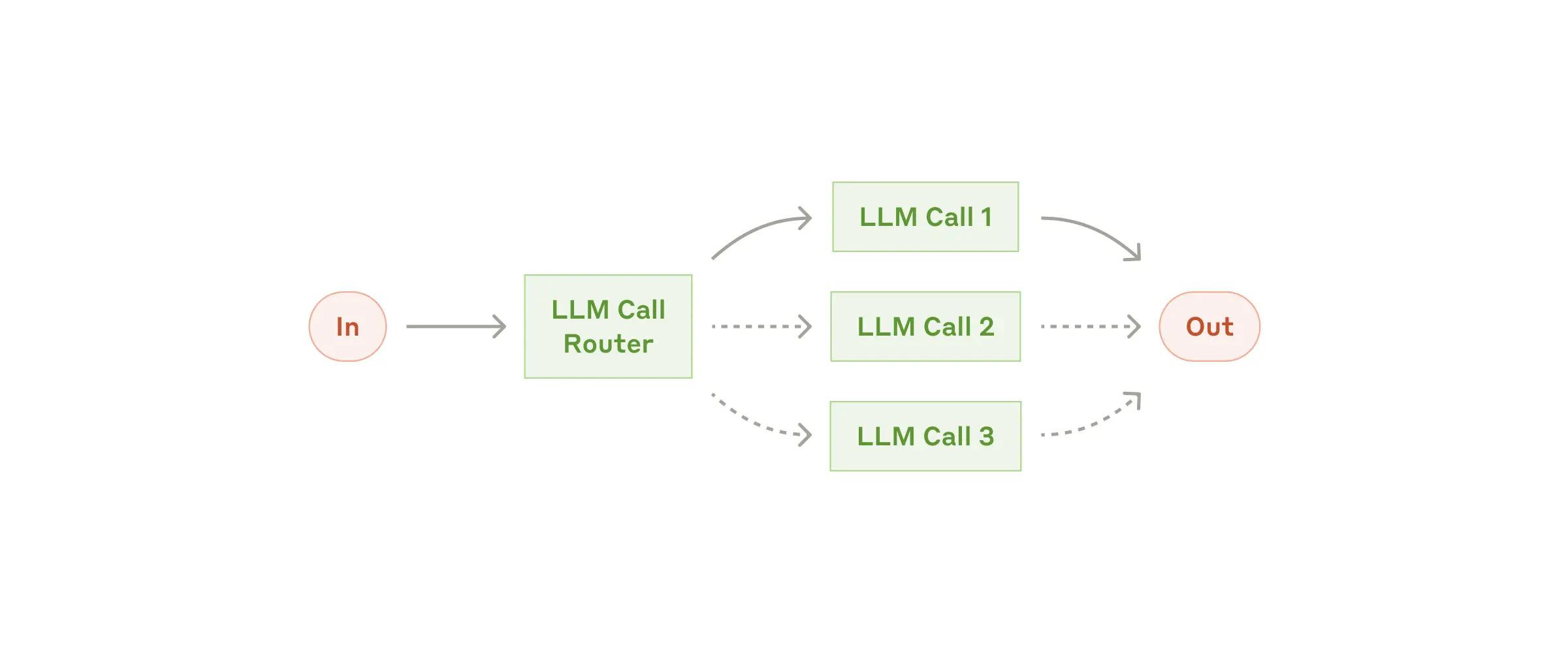

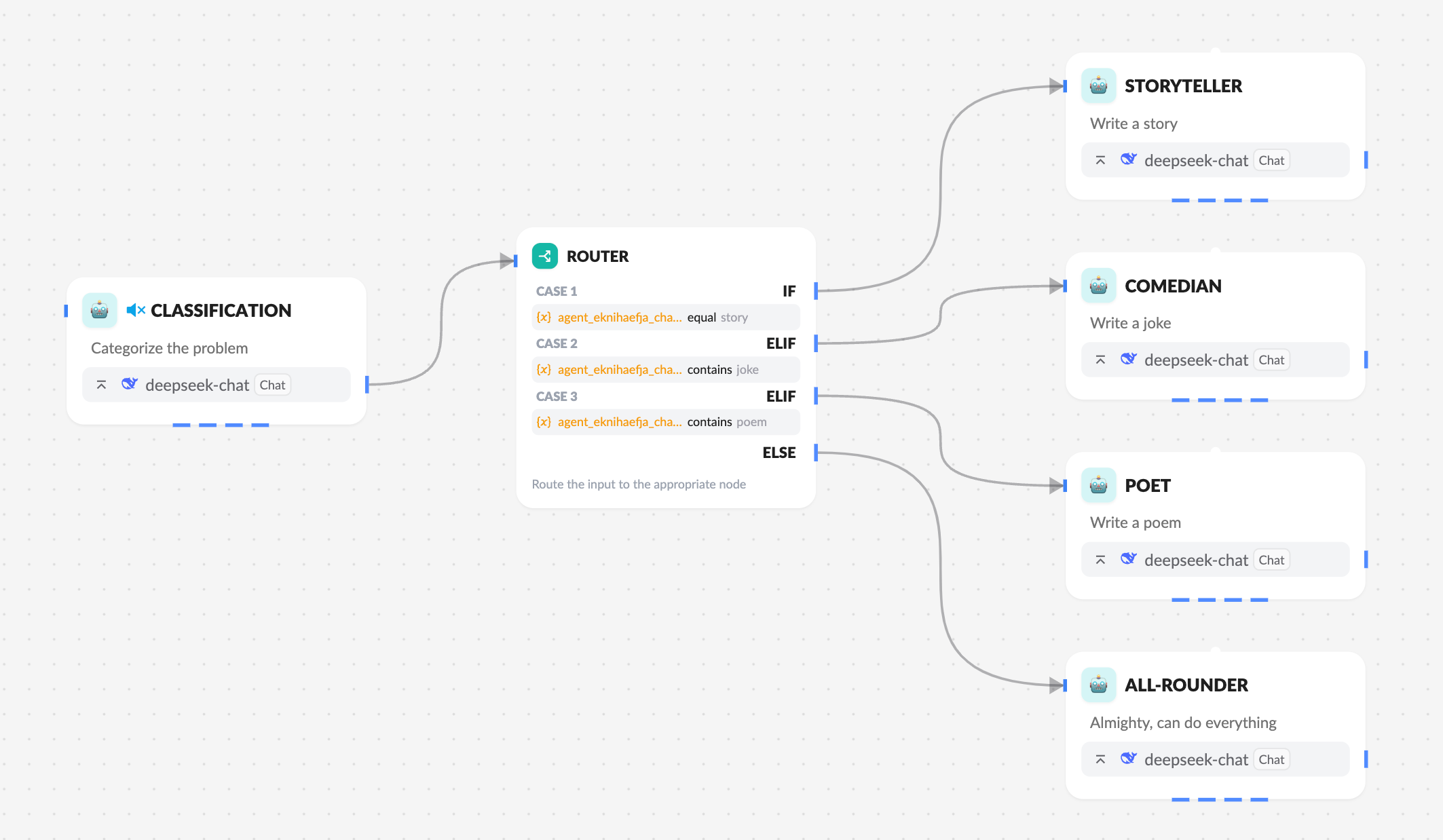

Routing

Routing classifies inputs and guides them to subsequent tasks. As mentioned in the Anthropic blog:

Routing classifies inputs and directs them to specialized subsequent tasks. This workflow allows for separation of concerns and the construction of more specialized prompts. Without it, optimizing for one type of input might impair performance for others.

When to use this workflow: Routing is suitable for complex tasks where there are clearly different categories that should be handled separately, and classification can be accurately performed by a large language model or more traditional classification models/algorithms.
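A routing step reduces to a classifier plus a table of handlers. In the sketch below, the keyword-based `classify` function, the category names, and the handler prompts are all hypothetical; a real system might instead ask an LLM for a structured label.

```python
from typing import Callable, Dict


def classify(text: str) -> str:
    """Stub classifier: keyword rules stand in for an LLM or ML classifier."""
    lowered = text.lower()
    if "refund" in lowered:
        return "billing"
    if "error" in lowered or "crash" in lowered:
        return "technical"
    return "general"


# Each category gets its own specialized handler (here, a prompt prefix).
HANDLERS: Dict[str, Callable[[str], str]] = {
    "billing": lambda q: f"[billing prompt] {q}",
    "technical": lambda q: f"[technical prompt] {q}",
    "general": lambda q: f"[general prompt] {q}",
}


def route(text: str) -> str:
    label = classify(text)
    # Fall back to the general handler for unknown labels.
    handler = HANDLERS.get(label, HANDLERS["general"])
    return handler(text)
```

Keeping the handlers in a table means a new category only needs a new label and a new entry, without touching the others.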

On the Xpert AI platform, the routing workflow can be implemented using conditional branches by adding multiple conditions and assigning subsequent nodes to each condition.

Reference Template: Workflow Routing.

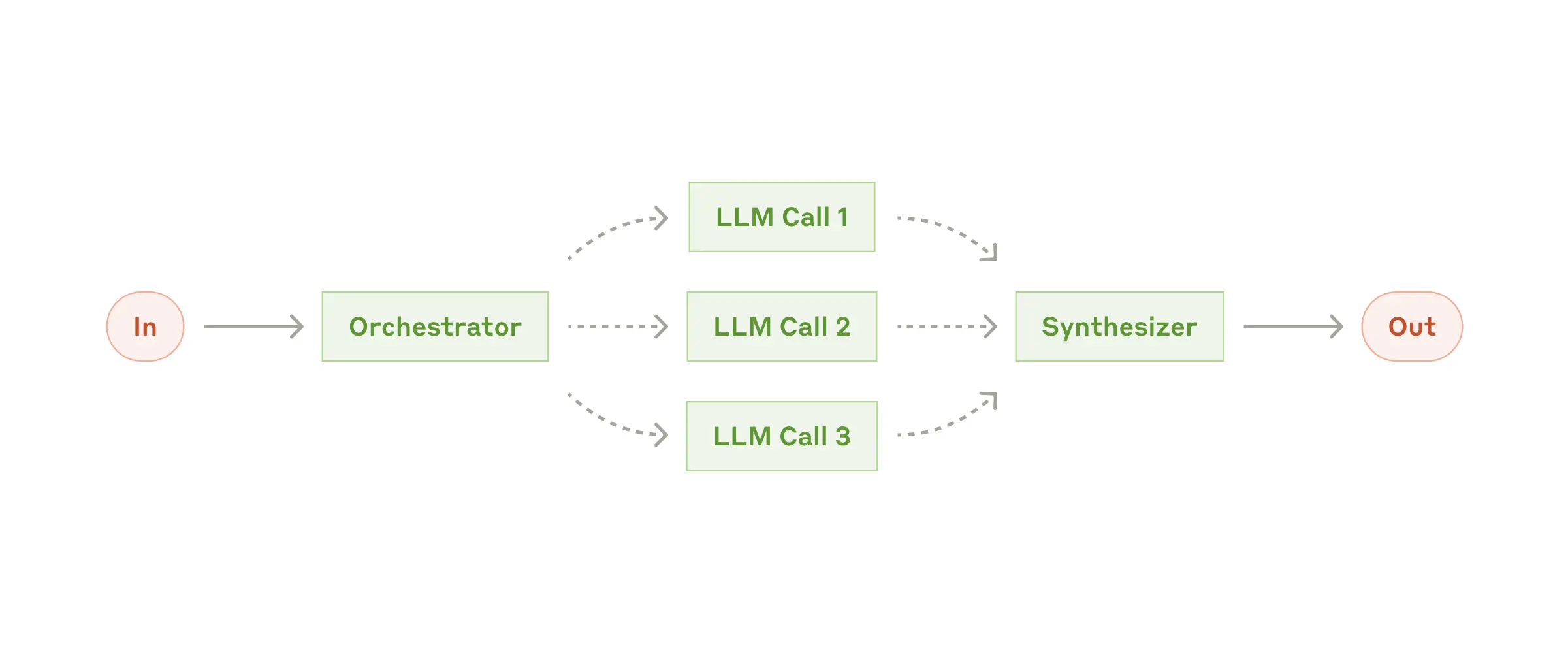

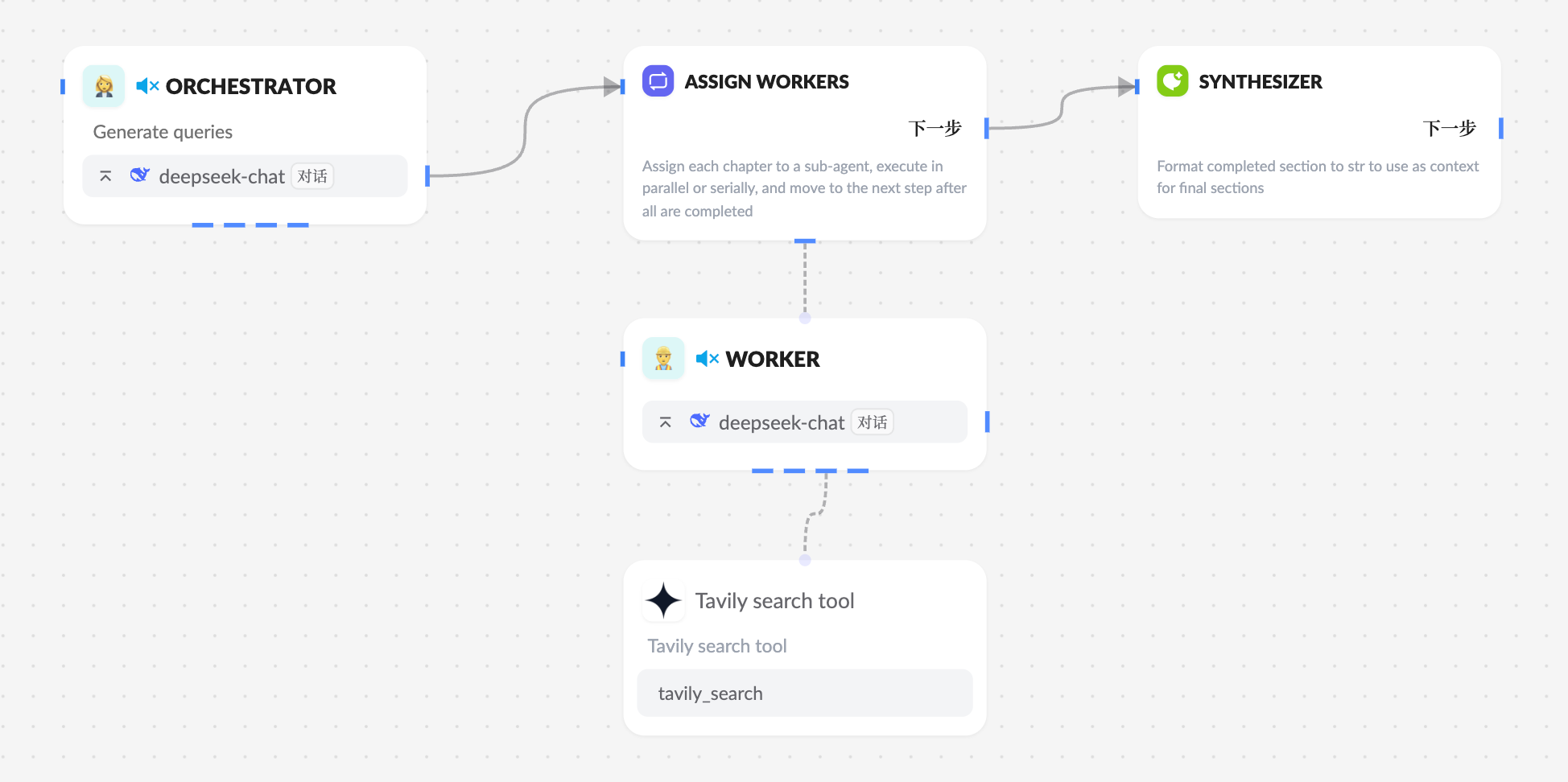

Orchestrator-Worker

In the orchestrator-worker pattern, an orchestrator breaks a task down and delegates each sub-task to workers. As mentioned in the Anthropic blog:

In an orchestrator-worker workflow, a central large language model dynamically breaks down a task, delegates it to worker large language models, and integrates their results.

When to use this workflow: This workflow is ideal for complex tasks where the required sub-tasks cannot be predicted (e.g., in coding, the number of files that need changes and the nature of each change likely depend on the task). While similar to parallelization in topology, the key distinction lies in its flexibility: the sub-tasks are not predefined but are decided by the orchestrator based on the specific input.
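The difference from parallelization shows up in code as a planning step whose output length is not known in advance. In this sketch, `plan`, `work`, and the comma-separated task format are hypothetical stubs; in practice the orchestrator would be an LLM returning a structured list of sub-tasks.

```python
from typing import List


def plan(task: str) -> List[str]:
    """Stub orchestrator: the sub-tasks depend on the input, not on fixed code."""
    return [f"edit {name.strip()}" for name in task.split(",") if name.strip()]


def work(subtask: str) -> str:
    """Stub worker: a real worker would be another LLM call."""
    return f"done: {subtask}"


def orchestrate(task: str) -> str:
    subtasks = plan(task)                  # decided at run time
    results = [work(s) for s in subtasks]  # delegate each sub-task
    return "\n".join(results)              # integrate the results
```

With this stub, `orchestrate("a.py, b.py")` yields two worker results and `orchestrate("a.py")` yields one, without any change to the workflow code.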

On the Xpert AI platform, you can implement the orchestrator-worker agent workflow using iteration logic nodes. The iteration node can process each element of an array by calling a sub-agent workflow, executing sequentially or concurrently (with a limit set) for each sub-task. After all elements are processed, the result array is returned, and subsequent nodes are executed.
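The iteration behavior described above (process each array element, sequentially or with bounded concurrency, then return a result array) can be approximated in plain Python. This is an assumption-laden sketch, not the platform's implementation: `iterate`, `sub_workflow`, and `max_concurrency` are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def iterate(
    items: Sequence[T],
    sub_workflow: Callable[[T], R],
    max_concurrency: int = 1,
) -> List[R]:
    """Apply sub_workflow to every item and return results in input order."""
    if max_concurrency <= 1:
        # Sequential execution: one element at a time.
        return [sub_workflow(item) for item in items]
    # Concurrent execution, capped at max_concurrency workers.
    with ThreadPoolExecutor(max_workers=max_concurrency) as pool:
        return list(pool.map(sub_workflow, items))
```

Because `pool.map` yields results in input order, the returned array lines up with the input array regardless of which element finishes first.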

Reference Template: Workflow Orchestrator-Worker.

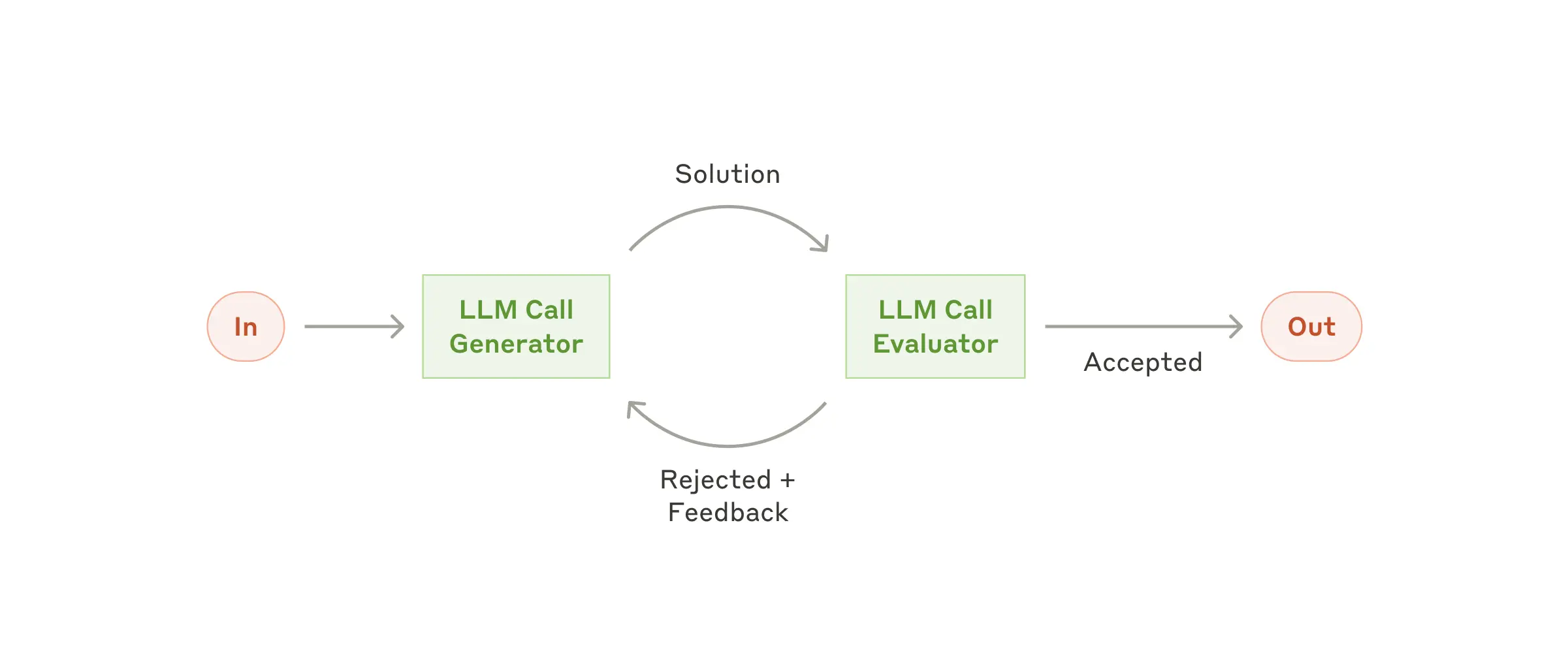

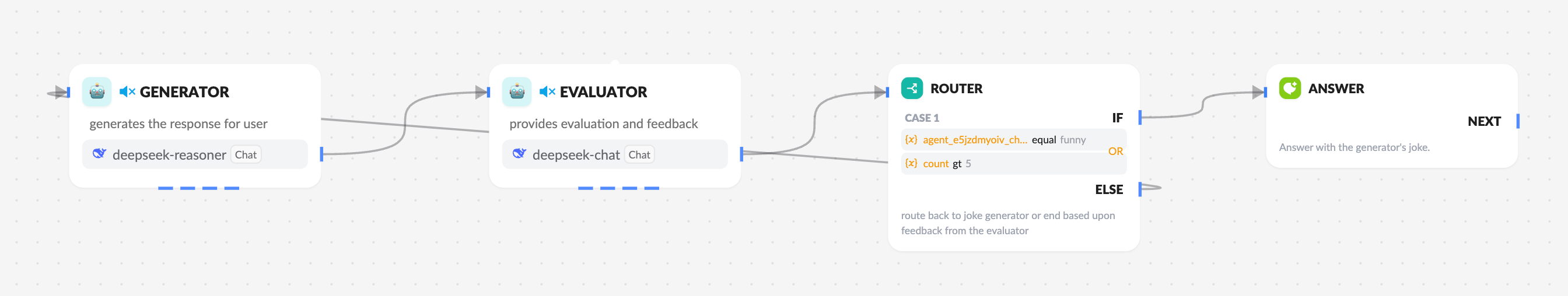

Evaluator-Optimizer

The evaluator-optimizer pattern pairs a generator with an evaluator. As mentioned in the Anthropic blog:

In the evaluator-optimizer workflow, one large language model (LLM) generates a response, while another provides evaluation and feedback in a loop.

When to use this workflow: This workflow is especially effective when we have clear evaluation criteria and iterative refinement provides measurable value. Two signs of a good fit are: first, that the LLM's responses demonstrably improve when a human articulates feedback; and second, that an LLM can provide such feedback itself. This is similar to the iterative process a human writer goes through when refining a document.
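The loop can be sketched as a generator, an evaluator that returns structured output, and a bounded number of rounds. The `Evaluation` type, the stub `generate` and `evaluate` functions, and the "greeting" criterion are all hypothetical; they only illustrate the control flow.

```python
from dataclasses import dataclass


@dataclass
class Evaluation:
    """Structured evaluator output: a verdict plus feedback for the generator."""
    passed: bool
    feedback: str


def generate(task: str, feedback: str = "") -> str:
    """Stub generator: applies the feedback if it asks for a greeting."""
    draft = f"Answer to: {task}"
    if "greeting" in feedback:
        draft = "Hello! " + draft
    return draft


def evaluate(draft: str) -> Evaluation:
    """Stub evaluator with one clear criterion: the draft must open with a greeting."""
    if draft.startswith("Hello"):
        return Evaluation(passed=True, feedback="")
    return Evaluation(passed=False, feedback="add a greeting")


def optimize(task: str, max_rounds: int = 3) -> str:
    feedback = ""
    draft = ""
    for _ in range(max_rounds):
        draft = generate(task, feedback)
        result = evaluate(draft)
        if result.passed:
            break                     # route out of the loop
        feedback = result.feedback    # route back to the generator
    return draft
```

The `max_rounds` cap is a design choice: it guarantees the loop terminates even if the evaluator never accepts a draft, in which case the last draft is returned.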

On the Xpert AI platform, you can implement the evaluator-optimizer agent workflow by using the agent's structured output along with routing logic.

Reference Template: Workflow Evaluator-Optimizer.

Conclusion

Success in the field of large language models is not about building the most complex system, but about building the right system for your needs. Start with simple prompts, optimize them through thorough evaluation, and only add multi-step agent systems when simpler solutions are insufficient.

When implementing agents, we try to follow three core principles:

- Keep the agent design simple.

- Prioritize transparency by clearly showing the agent’s planned steps.

- Carefully design your agent toolset through thorough documentation and testing.